Three-Dimensional Kaleidoscopic Imaging

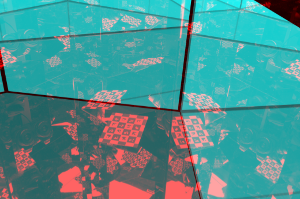

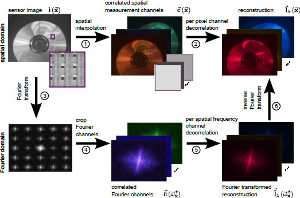

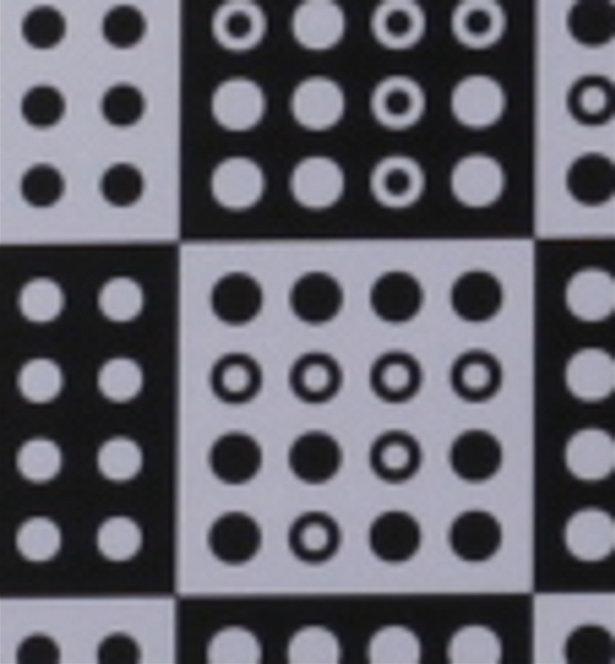

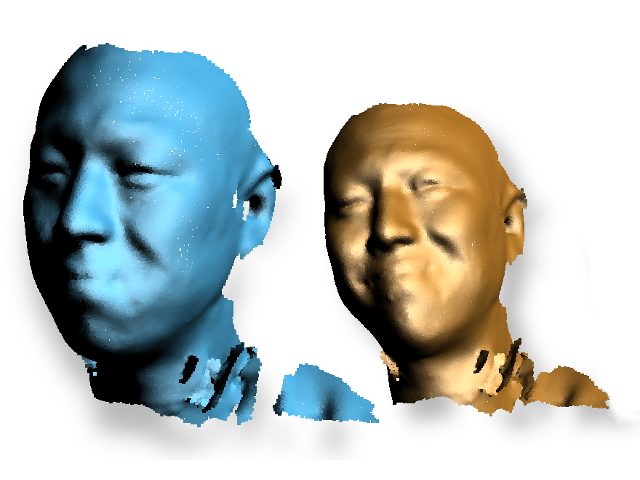

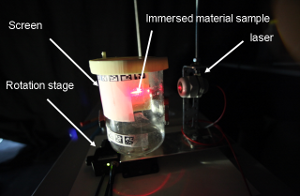

Three-dimensional kaleidoscopic imaging is a promising alternative for recording multi-view imagery. The main limitation of multi-view reconstruction techniques is the limited number of views available from multi-camera systems, especially for dynamic scenes. Our new system is based on imaging an object inside a kaleidoscopic mirror system. We show that this approach can generate a large number of high-quality views, well distributed over the hemisphere surrounding the object, in a single shot. In comparison to existing multi-view systems, our method offers a number of advantages: it can operate with a single camera, the individual views are perfectly synchronized, and they share the same radiometric and colorimetric properties. We describe the setup theoretically and provide methods for a practical implementation. An important goal of our techniques is to enable interfacing with standard multi-view algorithms for further processing.
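To illustrate why a mirror system multiplies the number of views, the following is a minimal sketch, not the authors' method: each sequence of mirror bounces corresponds to a virtual camera obtained by reflecting the real camera position across the mirror planes in turn. The names `reflect_point` and `virtual_cameras` are hypothetical, mirrors are assumed to be infinite planes given as a unit normal `n` and offset `d` (the plane `n·x = d`), and the sketch ignores mirror extent, visibility, and camera orientation.

```python
def reflect_point(p, n, d):
    """Reflect point p across the plane {x : n.x = d}; n must be unit length."""
    dist = sum(pi * ni for pi, ni in zip(p, n)) - d  # signed distance to the plane
    return tuple(pi - 2.0 * dist * ni for pi, ni in zip(p, n))


def virtual_cameras(camera, mirrors, max_bounces):
    """Map each bounce sequence (tuple of mirror indices) to a virtual camera position.

    Assumption: mirrors are unbounded planes, so every sequence is kept.
    A real system would discard sequences that are not physically realizable.
    """
    views = {(): camera}      # the empty sequence is the direct (real) view
    frontier = {(): camera}
    for _ in range(max_bounces):
        next_frontier = {}
        for seq, pos in frontier.items():
            for i, (n, d) in enumerate(mirrors):
                if seq and seq[-1] == i:
                    continue  # reflecting twice in the same plane undoes itself
                next_frontier[seq + (i,)] = reflect_point(pos, n, d)
        views.update(next_frontier)
        frontier = next_frontier
    return views


if __name__ == "__main__":
    # Two hypothetical mirror planes at x = 1 and x = -1.
    mirrors = [((1.0, 0.0, 0.0), 1.0), ((1.0, 0.0, 0.0), -1.0)]
    for seq, pos in virtual_cameras((0.5, 0.0, 0.0), mirrors, 2).items():
        print(seq, pos)
```

Under these assumptions the number of candidate views grows quickly with the number of mirrors and the allowed bounce count, while all views still come from a single exposure of one camera.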

Projects

Wei Hu, Zhao Dong, Ivo Ihrke, Thorsten Grosch, Guodong Yuan, Hans-Peter Seidel

In: Proceedings of I3D 2010.


Abstract

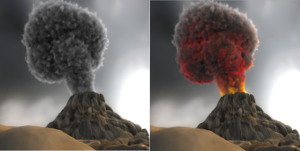

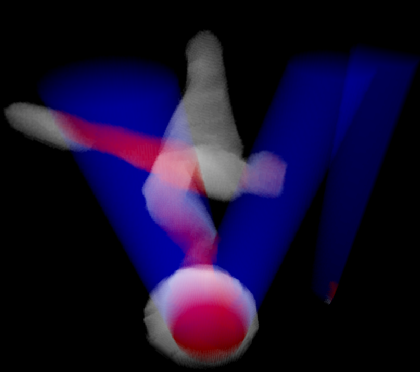

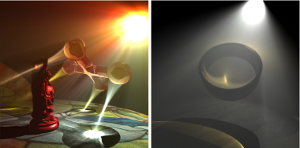

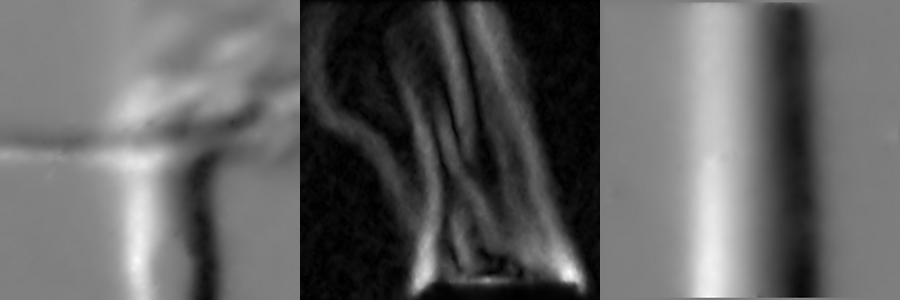

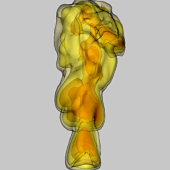

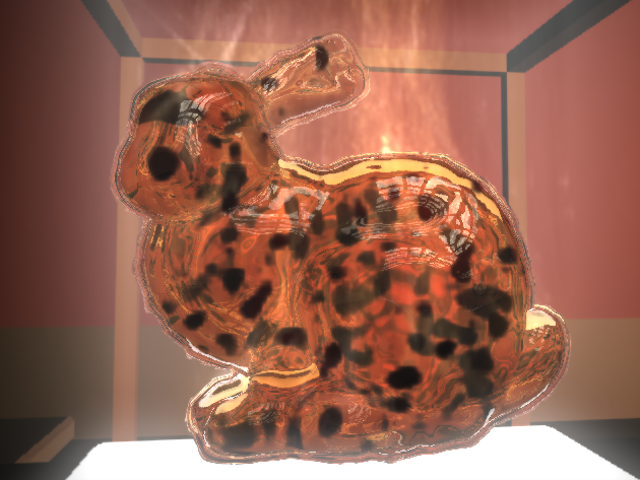

Volume caustics are intricate illumination patterns formed by light first interacting with a specular surface and subsequently being scattered inside a participating medium. Although this phenomenon can be simulated by existing techniques, image synthesis is usually non-trivial and time-consuming.

Motivated by interactive applications, we propose a novel volume caustics rendering method for single-scattering participating media. Our method is based on the observation that line rendering of illumination rays into the screen buffer establishes a direct light path between the viewer and the light source. This connection is introduced via a single scattering event for every pixel affected by the line primitive. Since the GPU is a parallel processor, the radiance contributions of these light paths to each of the pixels can be computed and accumulated independently. The implementation of our method is straightforward and we show that it can be seamlessly integrated with existing methods for rendering participating media. We achieve high-quality results at real-time frame rates for large and dynamic scenes containing homogeneous participating media. For inhomogeneous media, our method achieves interactive performance that is close to real-time. Our method is based on a simplified physical model and can thus be used for generating physically plausible previews of expensive lighting simulations quickly.
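The accumulation idea can be sketched on the CPU as follows. This is a simplified illustration under stated assumptions, not the paper's GPU implementation: it point-samples one illumination ray instead of rasterizing a line primitive, assumes a homogeneous medium with an isotropic phase function, and uses a pinhole camera at the origin looking down +z. The function name `accumulate_ray` and all parameters are hypothetical, and the result is in unnormalized units.

```python
import math


def accumulate_ray(image, origin, direction, length, flux,
                   sigma_s, sigma_t, focal=1.0, steps=64):
    """Additively splat single-scattering contributions of one light ray.

    Assumptions: `direction` is unit length, `origin` is where the ray enters
    the medium, and the image plane at z = focal covers x, y in [-1, 1].
    """
    height, width = len(image), len(image[0])
    phase = 1.0 / (4.0 * math.pi)      # isotropic phase function
    ds = length / steps                # step size along the light ray
    for k in range(steps):
        t = (k + 0.5) * ds             # distance travelled by the light so far
        x, y, z = (origin[i] + t * direction[i] for i in range(3))
        if z <= 0.0:
            continue                   # sample lies behind the camera
        # Project the scattering point onto the image plane.
        px = math.floor((focal * x / z + 1.0) * 0.5 * width)
        py = math.floor((focal * y / z + 1.0) * 0.5 * height)
        if not (0 <= px < width and 0 <= py < height):
            continue
        d_view = math.sqrt(x * x + y * y + z * z)   # scattering point to eye
        transmittance = math.exp(-sigma_t * (t + d_view))
        # One scattering event links the light path to the view ray of this pixel.
        image[py][px] += flux * sigma_s * phase * transmittance * ds


if __name__ == "__main__":
    img = [[0.0] * 8 for _ in range(8)]
    accumulate_ray(img, (-0.5, 0.0, 2.0), (1.0, 0.0, 0.0), 1.0,
                   flux=1.0, sigma_s=0.5, sigma_t=0.5)
    print(img[4])
```

Because each sample only adds to its own pixel, rays can be processed in any order, which is what makes additive accumulation a natural fit for parallel hardware.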

Project Page Video Bibtex

@INPROCEEDINGS{HDI:2010:VolumeCaustics,
  author = {Hu, Wei and Dong, Zhao and Ihrke, Ivo and Grosch, Thorsten and Yuan, Guodong and Seidel, Hans-Peter},
  title = {Interactive Volume Caustics in Single-Scattering Media},
  booktitle = {I3D '10: Proceedings of the 2010 symposium on Interactive 3D graphics and games},
  year = {2010},
  pages = {109--117},
  publisher = {ACM},
}
